In the realm of artificial intelligence and natural language processing, large language models have brought unprecedented capabilities. However, to truly harness their power and unlock their potential, one factor plays a crucial role: prompt engineering. In this blog post, we explore prompt engineering techniques that help us interact with language models more effectively and create a seamless, personalized user experience.

Importance of Prompt Engineering

Prompt engineering serves as the cornerstone of effective communication with language models. The way we frame a query significantly affects the response the model generates. A well-crafted prompt not only produces accurate and contextually appropriate results but also aligns the model's output with specific requirements, leading to greater user satisfaction. By mastering prompt engineering, we open up a world of possibilities in which language models become versatile assistants catering to our unique needs.

Prompt Engineering Techniques

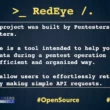

1. Instructional Prompts

Instructional prompts provide explicit guidance to the language model, telling it exactly what is expected. These prompts allow users to define the desired format, style, or even specific details they want the response to contain.

Let’s consider an example:

Prompt: “In a humorous tone, write a short paragraph about a mischievous cat who loves to play pranks on its owner.”

Response:

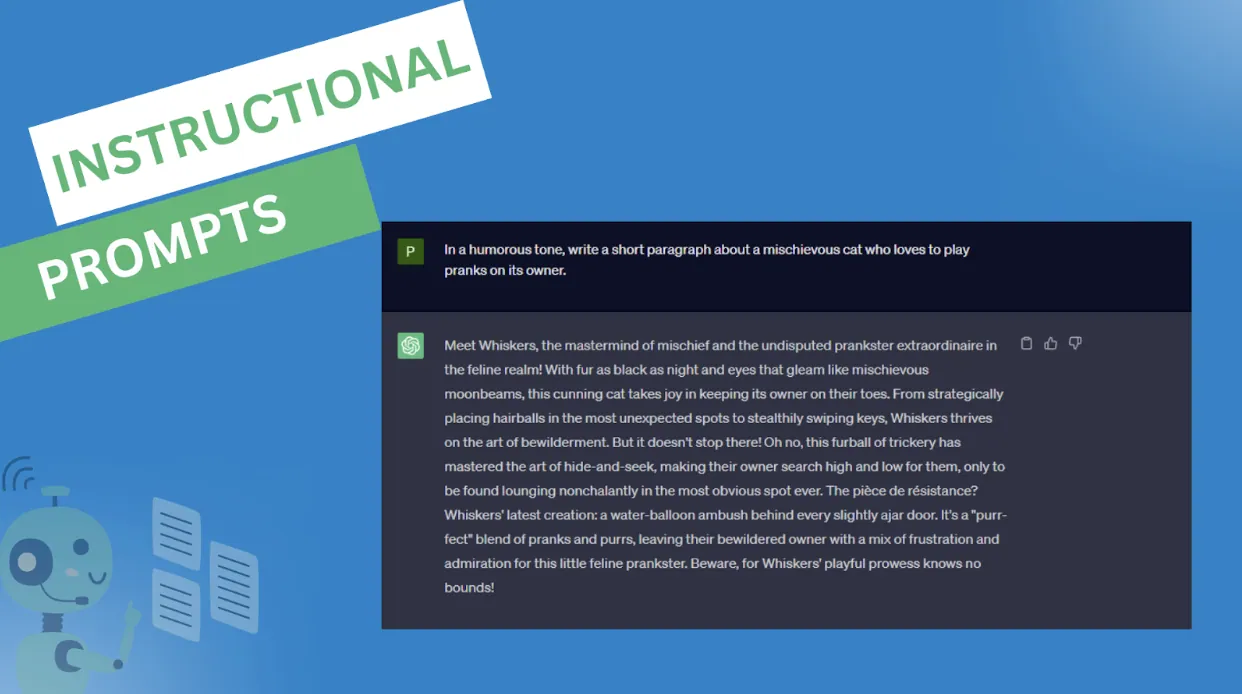
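To make the idea concrete, here is a minimal Python sketch that assembles an instructional prompt from a task plus explicit constraints. The function `build_instructional_prompt` and its `tone`, `max_sentences`, and `must_include` parameters are hypothetical illustrations, not part of any library; the code only builds the prompt string and does not call a model.

```python
from typing import List, Optional


def build_instructional_prompt(
    task: str,
    tone: Optional[str] = None,
    max_sentences: Optional[int] = None,
    must_include: Optional[List[str]] = None,
) -> str:
    """Assemble an explicit instruction from a task plus optional constraints."""
    parts = []
    if tone:
        parts.append(f"In a {tone} tone,")  # style constraint comes first
    parts.append(task.rstrip(".") + ".")  # the core task, ending in exactly one period
    if max_sentences is not None:
        parts.append(f"Use at most {max_sentences} sentences.")  # length/format constraint
    if must_include:
        parts.append("Mention: " + ", ".join(must_include) + ".")  # required details
    return " ".join(parts)


prompt = build_instructional_prompt(
    "write a short paragraph about a mischievous cat who loves to play pranks on its owner",
    tone="humorous",
    max_sentences=5,
)
print(prompt)
```

Keeping each constraint as a separate parameter makes it easy to vary one instruction at a time and compare the responses.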

2. Contextual Prompts

Contextual prompts give the language model background information or details from previous interactions, helping it keep its responses coherent. They are particularly useful for multi-turn conversations and dialogue-based applications.

For instance:

Prompt: “You are a virtual travel assistant. The user previously mentioned that they are interested in exploring beaches. Provide them with a list of top beach destinations in the world.”

Response:

By incorporating the context of the user’s preference for beaches, the language model can deliver more relevant and tailored recommendations.

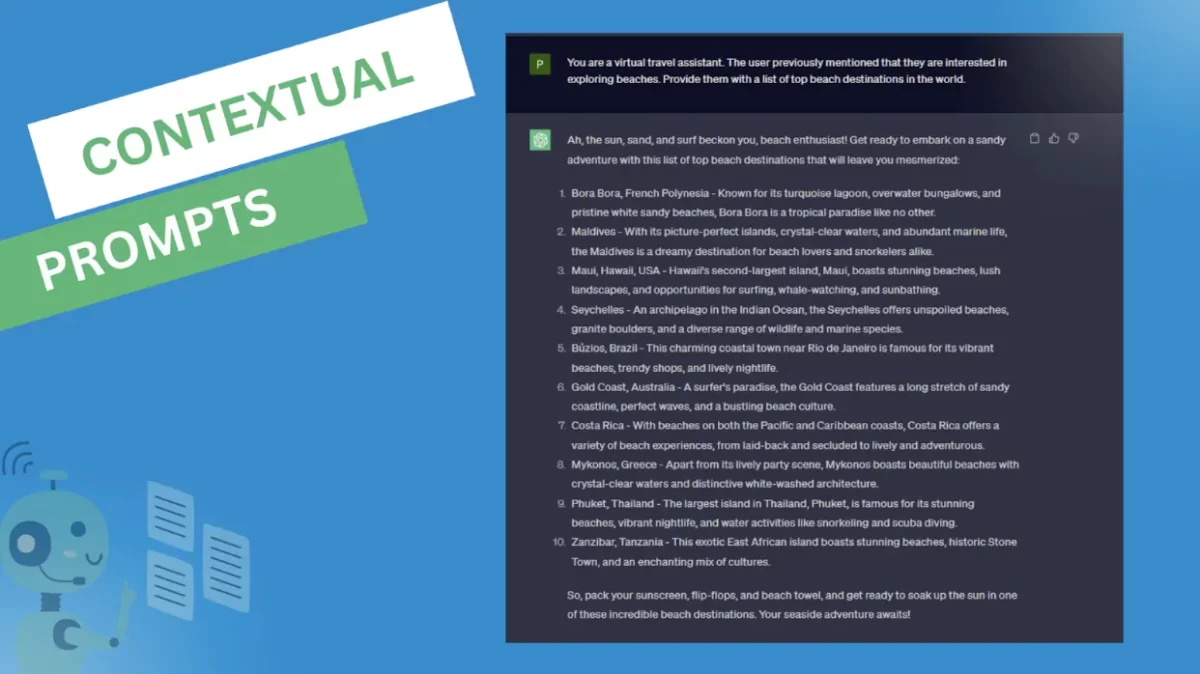
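A contextual prompt is often sent as a list of role-tagged messages, the format many chat-style model APIs accept. The sketch below, assuming that `role`/`content` message shape, places the assistant persona and the remembered preference in a system message ahead of the user's request. The helper `build_contextual_messages` and the "Known user preferences" wording are hypothetical; sending the messages to a model is left out.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def build_contextual_messages(
    persona: str, known_preferences: List[str], request: str
) -> List[Message]:
    """Put the persona and remembered user context in a system message."""
    context_lines = [persona]
    if known_preferences:
        context_lines.append("Known user preferences: " + "; ".join(known_preferences) + ".")
    return [
        {"role": "system", "content": " ".join(context_lines)},  # background context
        {"role": "user", "content": request},  # the actual request
    ]


messages = build_contextual_messages(
    persona="You are a virtual travel assistant.",
    known_preferences=["interested in exploring beaches"],
    request="Provide me with a list of top beach destinations in the world.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```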

3. Few-Shot Learning

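Few-shot learning (often called few-shot prompting) means including a small number of worked examples, or demonstrations, in the prompt itself. The model infers the task and the expected output format from these demonstrations and applies the same pattern to a new input; no retraining or weight updates are involved.

For instance:

Prompt: “Translate English to French. English: Hello, how are you? French: Bonjour, comment allez-vous? English: Thank you for your help. French: Merci pour votre aide. English: Where is the train station? French:”

Response:

With just two demonstrations, the language model can grasp both the translation task and the answer format, showcasing its ability to handle new tasks from a handful of examples.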
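A few-shot prompt can be built mechanically: each demonstration is an input/output pair, and the final input is left without an output for the model to complete. This is a minimal sketch; `build_few_shot_prompt` and the `English:`/`French:` labels are illustrative choices, not a required format.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
    input_label: str = "English",
    output_label: str = "French",
) -> str:
    """Format demonstrations as labeled input/output pairs, then leave the last output open."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"{input_label}: {source}")
        lines.append(f"{output_label}: {target}")
        lines.append("")  # blank line between demonstrations
    lines.append(f"{input_label}: {query}")
    lines.append(f"{output_label}:")  # the model is expected to continue from here
    return "\n".join(lines)


demonstrations = [
    ("Hello, how are you?", "Bonjour, comment allez-vous?"),
    ("Thank you for your help.", "Merci pour votre aide."),
]
prompt = build_few_shot_prompt(
    "Translate English to French.", demonstrations, "Where is the train station?"
)
print(prompt)
```

Ending the prompt with an open `French:` label nudges the model to answer in the same format as the demonstrations.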

4. Multi-Turn Interactions

Crafting prompts as multi-turn interactions enables a more dynamic, interactive conversation with the language model. This technique is especially useful for applications in which users engage in back-and-forth exchanges.

For example:

Prompt:

User: “What is the capital of France?”

Model: “The capital of France is Paris.”

User: “Tell me more about its history.”

Response:

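Because the earlier turns are included in the prompt, the model can resolve “its” to Paris and continue the conversation coherently, providing a continuous and informative experience. The model itself keeps no memory between requests, so the application typically sends the accumulated conversation history with each new turn.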
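The sketch below shows one way an application can keep a multi-turn conversation going: it stores every turn and passes the whole history to the model on each request, so a follow-up like "its history" can be resolved against the earlier question. `Conversation` and `fake_model` are hypothetical names, and `fake_model` is a stand-in for a real model call that simply reports how many user turns it received.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


class Conversation:
    """Keeps the running transcript so every request carries the earlier turns."""

    def __init__(self, system_prompt: str = "") -> None:
        self.messages: List[Message] = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def ask(self, user_text: str, model: Callable[[List[Message]], str]) -> str:
        self.messages.append({"role": "user", "content": user_text})
        reply = model(list(self.messages))  # send the full history, not just the latest turn
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_model(history: List[Message]) -> str:
    """Hypothetical stand-in for a real model: reports how many user turns it received."""
    user_turns = sum(1 for m in history if m["role"] == "user")
    return f"(reply based on {user_turns} user turn(s))"


chat = Conversation()
chat.ask("What is the capital of France?", fake_model)
print(chat.ask("Tell me more about its history.", fake_model))
```

Long conversations eventually exceed a model's context window, so real applications often trim or summarize older turns.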

5. Prompt Tuning

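Prompt tuning, as used here, means iteratively refining prompts based on user feedback and performance evaluation: test a prompt, inspect where its outputs fall short, revise the wording, and test again. This iterative process lets us adapt the model's behavior to a specific application without changing the model itself. Note that in the research literature, “prompt tuning” usually refers to a different technique, which learns continuous “soft prompt” embeddings by gradient descent while the model's weights stay frozen.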

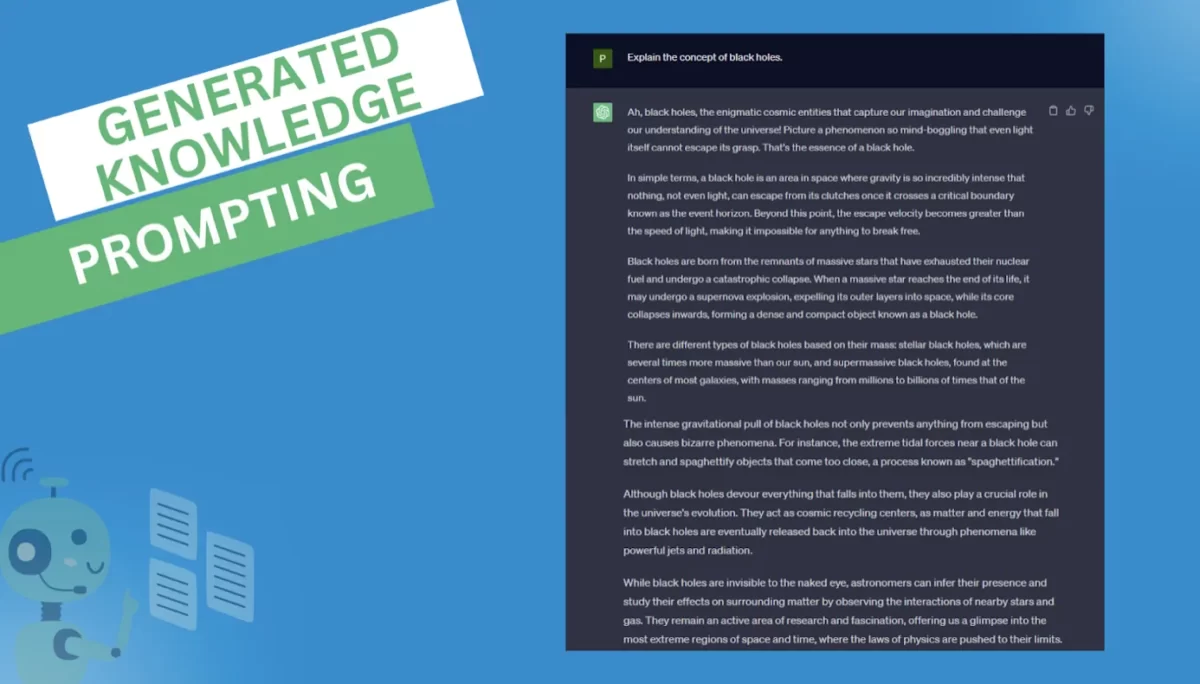
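The refine-and-evaluate loop can be sketched as scoring several prompt variants against a small set of test cases and keeping the best one. This sketch assumes a simple metric (does the output contain the expected label?) and uses a hypothetical `fake_model` in place of a real model; it illustrates manual prompt refinement, not learned soft prompts.

```python
from typing import Callable, List, Tuple

EvalCase = Tuple[str, str]  # (input text, label the answer should contain)


def score_prompt(template: str, cases: List[EvalCase], model: Callable[[str], str]) -> float:
    """Return the fraction of test cases whose output contains the expected label."""
    hits = 0
    for text, expected in cases:
        output = model(template.format(input=text))
        if expected.lower() in output.lower():
            hits += 1
    return hits / len(cases)


def pick_best_prompt(
    templates: List[str], cases: List[EvalCase], model: Callable[[str], str]
) -> Tuple[float, str]:
    """Score every candidate template and return (best score, best template)."""
    scored = [(score_prompt(t, cases, model), t) for t in templates]
    return max(scored, key=lambda pair: pair[0])


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model: it only gives a clean label
    when the prompt spells out the allowed labels."""
    if "positive or negative" in prompt:
        return "negative" if "terrible" in prompt else "positive"
    return "It sounds like the writer has an opinion."


cases = [("I loved it.", "positive"), ("The service was terrible.", "negative")]
candidates = [
    "What do you think of this review? {input}",  # first draft
    "Classify the sentiment of this review as positive or negative: {input}",  # revision
]
best_score, best_template = pick_best_prompt(candidates, cases, fake_model)
print(best_score, best_template)
```

In practice the candidate list grows as feedback reveals new failure cases, and the substring check would be replaced by whatever evaluation fits the application, including human ratings.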

6. Generated Knowledge Prompting

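Language models encode a large amount of knowledge from their training data. Generated knowledge prompting makes that knowledge explicit in two steps: first, the model is asked to generate relevant facts about the question; second, those generated facts are included in a new prompt that asks the model to answer the question. Writing the background knowledge out first can make answers more informative, although the generated facts should still be checked, because models can state incorrect information confidently.

For instance:

Prompt 1: “Generate a few key facts about black holes.”

Prompt 2: “Using the facts above, explain the concept of black holes.”

Response: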
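One common formulation of generated knowledge prompting runs in two stages: the model first generates background facts about the question, and a second prompt asks it to answer using those facts. The sketch below assumes a generic `model` callable that maps a prompt string to a completion; `generated_knowledge_answer` and `recording_model` are hypothetical names, and `recording_model` only returns placeholder text so the two prompts can be inspected.

```python
from typing import Callable, List


def generated_knowledge_answer(
    question: str, model: Callable[[str], str], num_facts: int = 3
) -> str:
    # Stage 1: ask the model to write out relevant background knowledge first.
    knowledge_prompt = (
        f"Generate {num_facts} short factual statements relevant to the question below.\n"
        f"Question: {question}\n"
        "Facts:"
    )
    knowledge = model(knowledge_prompt)

    # Stage 2: put the generated knowledge in front of the original question.
    answer_prompt = (
        "Use the following facts to answer the question.\n"
        f"Facts:\n{knowledge}\n"
        f"Question: {question}\n"
        "Answer:"
    )
    return model(answer_prompt)


calls: List[str] = []


def recording_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model: records each prompt and returns placeholders."""
    calls.append(prompt)
    if prompt.endswith("Facts:"):
        return "- (generated fact 1)\n- (generated fact 2)\n- (generated fact 3)"
    return "(answer grounded in the facts above)"


answer = generated_knowledge_answer("Explain the concept of black holes.", recording_model)
print(answer)
```

Keeping the two stages as separate calls also makes it possible to review or filter the generated facts before they are used.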

Prospects

The future of prompt engineering is promising. As research in natural language processing progresses, we can expect more sophisticated techniques that offer greater control and precision over language model outputs. This could lead to more intuitive interfaces, personalized user experiences, and applications that cater to a wide range of domains.

Mitigating Risks, Ethics, and Potential Benefits

While prompt engineering unlocks immense potential, it also raises ethical considerations. We must take care to avoid generating biased or harmful content. Rigorous content moderation, human-in-the-loop validation, and adherence to responsible AI practices are vital to the safe and ethical use of language models.

The potential benefits of prompt engineering are far-reaching. It empowers individuals and businesses to utilize language models effectively without needing extensive technical expertise. Applications that incorporate prompt engineering can lead to enhanced productivity, personalized interactions, and improved customer experiences.

Conclusion

Prompt engineering techniques are the key to unlocking the true potential of language models and revolutionizing human-machine interactions. By providing clear instructions, context, and personalization, we can transform language models into powerful and adaptable tools that cater to diverse applications.

Embracing ethical practices and staying at the forefront of research will pave the way for a future in which language models become indispensable assistants in our daily lives. As we continue to refine prompt engineering and develop new techniques, we embark on an exciting journey of discovery and progress in AI and natural language processing.